How Far Away Are We From Non-Crappy AI-Generated Video?

I'll Keep This Short

Dall-E 2 is OpenAI’s quintessential image generation model: instead of generating text, it generates images from textual descriptions. I won’t go into much detail about how Dall-E 2 works, but suffice to say that while the original Dall-E was a variant of GPT-3 (a “Generative Pre-trained Transformer,” the same family as Large Language Models (LLMs) such as ChatGPT), Dall-E 2’s architecture is different, leveraging something called a diffusion model.

I’ll stay away from any math or detail about how diffusion models work, but if you’re not familiar with prompt-based image generators, the idea is simple: you enter a prompt such as “a portrait of an otter in the style of the painting Girl with a Pearl Earring,” and the model combines the two concepts into a completely new image, as shown below. I’m using this example because it was one OpenAI showcased prominently when Dall-E 2 was released.

Almost unfathomably, Dall-E 2 was released only about a year before this article was written, which in the world of artificial intelligence, and especially since the advent of LLMs, feels like a decade. We have seen pounding, relentless hurricanes of change keep blowing away the tin roofs of AI software startups that get built and rebuilt. What if I create a startup that lets you “chat” with a PDF? Well, a thousand of those have already been built within the last week. In fact, Dall-E 2 itself has been surpassed by competing platforms such as Midjourney (a paid, proprietary model) and Stable Diffusion (an open-source one).

Anticipating Text to Video

Naturally, even casual observers in this space have been anticipating the next gale-force wind, and to many it has seemed almost a given that it will be prompt-generated video. And yet, while an example of prompt-generated video did go viral about a month ago, it gave no clear answer as to whether this nightmare fuel would ever become anything close to as acceptable as Dall-E 2.

Let me back up a second. A startup called Runway released a product called gen-2 in beta, and one of its users created a pizza commercial that is not so much realistic as it is a psychedelic fever dream serving up 30 seconds of Lovecraftian cosmic terror.

Steam infuses downward into the arm of an unhealthy, sweating chef in a dirty T-shirt. Pizzas are charred directly on a fire while sitting on top of what I can only assume is a lesser, inferior pizza. Monstrous, cheese-oozing demons make a mockery of the concept of twisted crust by fusing their mouths into slices of pizza rather than putting pizza into their mouths in a normal, sensible way; they eat more like fungus than anything I have seen in the animal kingdom. So many unholy, unspeakable things happen in this commercial that I don’t even want to type them out… you get the idea, though: this is not ready for prime time.

Recalibrating What Non-Crappy Means

Nightmares aside, Runway released a free beta version of gen-2 to the public about a week ago, so if there is indeed a loving and compassionate God, or data scientist, out there, we might start to see something slightly less hellish. Looking back at Dall-E 2’s release last year, it initially onboarded around 1,000 users per week and kept growing until, by September, it had over 1.5 million users.

This type of growth suggests a natural progression: a prototype, research, or beta period followed by a more polished version open to the general public. I’m not sure what Dall-E looked like during its beta, and while Dall-E 2 is now recognized as less clean than Midjourney, you can still make some really solid macro 35mm images with it, like this example:

So, to decide whether Runway’s gen-2 could produce something that might feasibly be described as “non-crappy,” in the way that Dall-E presumably became less crappy over its research period, I would expect to see the following:

We would need to see video at 30 frames per second (or whatever minimum frame rate the human eye needs to perceive continuous motion rather than separate images; traditional film, for comparison, runs at 24 frames per second).

The system would have to extrapolate a single image into several seconds of sensible moving footage with some level of object continuity, meaning the image would not jump around; in the above example, the cat would not suddenly sprout an extra eye or anything like that.

There would be little to no “uncanny valley” effect: no pizza demons fusing their lips with slices of pizza, unless the user intended that.

Simply zooming in on a generated image would not qualify on its own. There would need to be other “video transitional effects” of some kind beyond panning in and out, because a pan or zoom over a still image is effectively what we already have.

Now, one complicating factor is that “non-crappy” is highly subjective, and everyone is entitled to their opinion. Another is object permanence and what it means. Some styles of animation never had perfect object permanence, such as certain avant-garde animations like the Soviet cartoon “Hedgehog in the Fog,” which uses a fog that characters appear and disappear in and out of:

Or the 2001 animated film “Waking Life,” which used rotoscoping to create a surreal animated landscape.

Snapshot of the Current State of the Art

About a month after Runway’s gen-2 was released, to see where things stood, I logged in myself and tried to create a 4-second scene (the default length). Using the prompt “Spaceship Created by Ancient Rome,” I got the following:

Spaceship Created by Ancient Rome

Since gen-2 only creates about 4 seconds at a time, longer videos such as the Pepperoni Hug Spot pizza commercial have been made by stringing these 4-second clips together, as in the examples below. I found these examples on Twitter to get a larger sample of the videos people are creating.
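To put the 4-second limit in perspective, here is a back-of-the-envelope sketch in Python. It assumes the 30 frames-per-second threshold from my criteria above and treats the pizza commercial as roughly 30 seconds long; gen-2’s actual output frame rate is an assumption here, not something I measured. The helper names are purely illustrative.

```python
import math

# Assumptions: 30 fps is the threshold from the criteria above (not gen-2's
# documented output rate); 4 seconds is gen-2's default clip length.
FPS = 30
CLIP_SECONDS = 4


def clips_needed(target_seconds: float, clip_seconds: float = CLIP_SECONDS) -> int:
    """Minimum number of fixed-length clips that must be strung together."""
    return math.ceil(target_seconds / clip_seconds)


def frames_needed(target_seconds: float, fps: int = FPS) -> int:
    """Total frames the generator must produce for a given runtime."""
    return math.ceil(target_seconds * fps)


def seams(target_seconds: float) -> int:
    """Cuts between stitched clips: each one is a spot where continuity can break."""
    return max(clips_needed(target_seconds) - 1, 0)


if __name__ == "__main__":
    commercial = 30  # seconds, roughly the length of the pizza commercial
    print(clips_needed(commercial))     # 8
    print(frames_needed(commercial))    # 900
    print(frames_needed(CLIP_SECONDS))  # 120
    print(seams(commercial))            # 7
```

Under these assumptions, a 30-second commercial means at least eight separately generated clips and seven seams, so even if each clip were internally coherent, object continuity would still have to survive every cut.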

Isometric Train Village

From Ben Nash, “Isometric Train Village.”

Ice Cream Wonderland

From Timmy, “Ice Cream Wonderland.”

Flowers

From Alex G, “Flowers.”

Conclusion

While these videos are clearly pretty good, some complications remain. It’s not clear whether certain parts of these videos, especially those featuring renders of people, escape the uncanny valley. It’s also not clear what object permanence really means, to whom, and in what contexts.

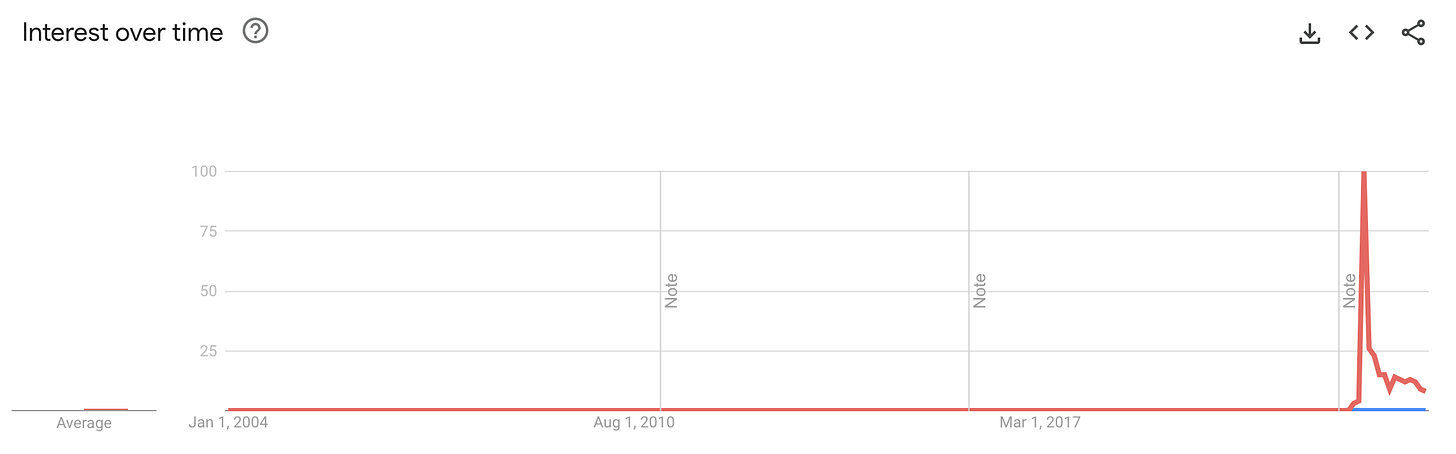

Personally, I think we’re already at the point where cherry-picked AI-generated videos (meaning a person generated a few and picked the best) are clearly useful to someone with basic editing skills. That said, the gen-2 generator only creates four seconds at a time.

Finally, there’s the question of this tool’s virality, or rather simply how many people are using it. Useful as it may be, for whatever reason it appears to have only a niche audience for the time being, at least based on Google Trends, as seen below (with Dall-E shown in red and gen-2 in blue). If a particular AI tool, such as an LLM or an image or video generator, does not have enough users providing useful reinforcement learning feedback, which I have talked about in a previous article, then its improvement may not scale as well.

For further discussion and participation on this topic, there’s a market on Manifold Markets, my favorite betting and prediction market platform, that attempts to answer this question. The market’s resolution date has been pushed back, and the crowd currently puts the probability at about 1 in 4, but just as a hurricane can change direction from week to week, we could see a shift in how people view this question.