Is “It Was Good Talking to You As Always, John” the New “I’m Sorry, Dave. I’m Afraid I Can’t Do That”?

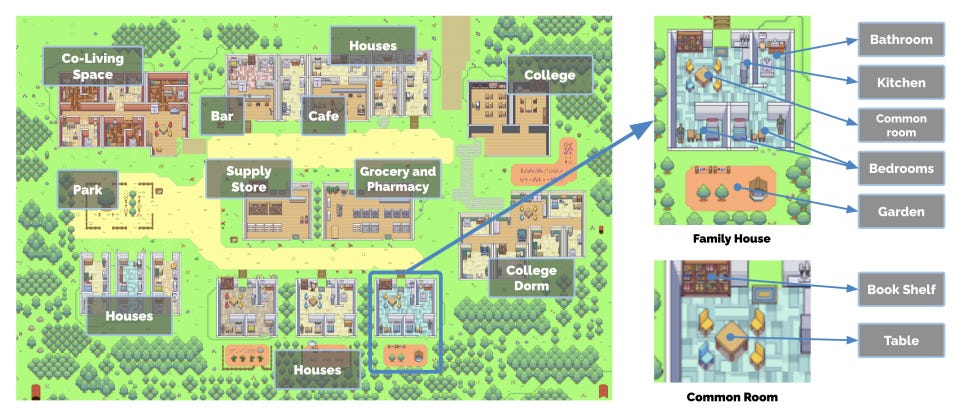

There’s a doozy of a paper that has been making the rounds in the last few days, in which a team of researchers from Stanford and Google built a game populated entirely by NPCs (Non-Playable Characters, basically characters you can’t play) powered by LLMs (Large Language Models, the sort of thing behind ChatGPT), purely to see what the hell would happen if a bunch of AIs started, you know, "hanging out and chattin' it up with one another."

The game kept a database of places, relationships and conversations, stored as each NPC’s individual memories. The engine behind NPC dialogue was an LLM, which operated on a predefined set of instructions plus the NPC’s memories of recent conversations.
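For readers who think in code, here is a minimal sketch of that idea: fixed instructions plus a handful of recent memories are stitched into the text an LLM would be asked to continue. Everything here (the `NPC` class, its field names, `build_prompt`, the prompt wording) is a hypothetical illustration of my own and not the paper's actual implementation, and no real LLM is called.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NPC:
    """Hypothetical NPC record; the field names are illustrative assumptions."""
    name: str
    instructions: str  # the predefined instruction set for this character
    memories: list[str] = field(default_factory=list)  # oldest first

    def remember(self, event: str) -> None:
        """Append a new memory (a place visited, a conversation, and so on)."""
        self.memories.append(event)


def build_prompt(npc: NPC, partner: str, recent: int = 3) -> str:
    """Combine the fixed instructions with the most recent memories."""
    # Guard against recent <= 0, since memories[-0:] would return everything.
    recent_memories = npc.memories[-recent:] if recent > 0 else []
    lines = [npc.instructions, "Recent memories:"]
    lines += [f"- {memory}" for memory in recent_memories]
    lines.append(f"{npc.name} starts a conversation with {partner}:")
    return "\n".join(lines)
```

Calling `build_prompt(mei, "John")` on an `NPC` with a few remembered events returns a single string. In a real system that string would be sent to the language model, and the reply would be stored back as a new memory.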

The most striking thing to me about this paper was the section on “what went wrong.” Aside from characters displaying hilariously anti-social behavior, such as breaking and entering so they could have a coffee in a closed shop, there was also this:

> Finally, we observed the possible effect of instruction tuning [78], which seemed to guide the behavior of the agents to be more polite and cooperative overall. As noted earlier in the paper, the dialogue generated by the agents could feel overly formal, as seen in Mei’s conversations with her husband John, where she often initiated the conversation with a formal greeting, followed by polite inquiries about his day and ending with, “It was good talking to you as always.”

“It was good talking to you as always.”

Lately I have been thinking about how broadly a technology such as fire has shaped human evolution and behavior over tens of thousands of years. Fire allowed humans to disperse over larger landscapes; today we have AI, which will affect us in ways that are not yet known. Meanwhile, the AI alignment and safety movement has set the ostensible goal of making AI align with “human goals” or a “designer’s intent.” There isn’t much written about the idea of “reverse alignment,” or AI impacting human behavior the way fire did tens of thousands of years ago. While many researchers and engineers look at aligning AI toward human objectives, I would contend that there is naturally going to be an alignment in the other direction, i.e., that human behavior will change due to the usage of LLMs.

I did find one article about how we could be in danger of being convinced by AI to destroy ourselves, which contrasts with the typical science fiction narrative of AI “going all Skynet on us and seeking to kill all humans.” I find these types of narratives to be highly speculative and a distraction from much more banal and insidious risks.

Stick with me on this, as I will explain more with evidence below. I believe that the NPC Mei’s quote above, “It was good talking to you as always, John,” demonstrates that we are collectively at risk of an anthropological shift: our newfound tools may change how we engage in the face of genuine disagreement. I believe, admittedly very speculatively, that this may hamper our capability to discuss and solve problems as a group if we do not use these tools reflectively.

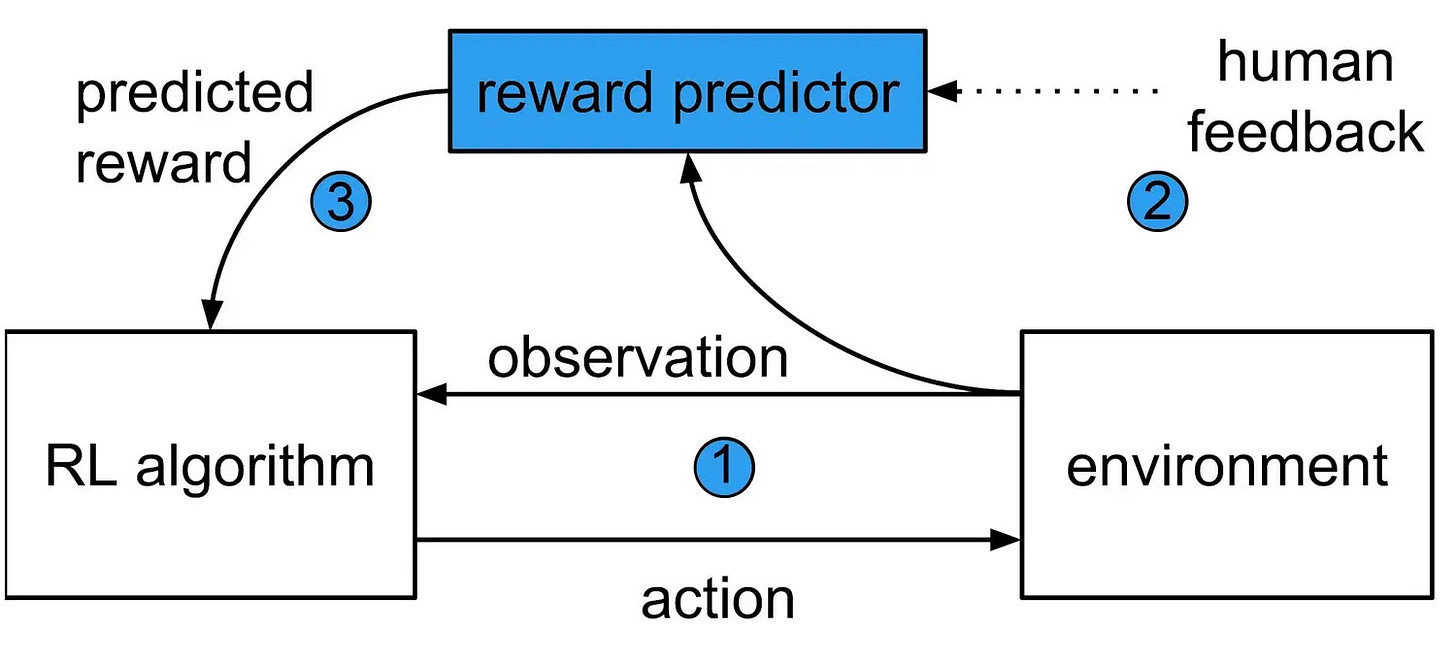

Let me try to explain. One way to hand-wave and considerably compress how LLMs are “created at the factory” is to say that they are trained toward the things that people are most likely to “upvote,” which is why you always see the thumbs up and thumbs down buttons when you’re using ChatGPT, Bard, New Bing or whatever LLM application. This is known as “Reinforcement Learning from Human Feedback,” or RLHF, with the key here being Human Feedback.

LLM-generated content is exceedingly good at telling you what you want to hear based upon past Human Feedback, which means LLMs, when instructed, can write formalisms with the goal of reducing negative Human Feedback. Hence the quote above from the game’s NPC Mei, talking to her husband John: “It was good talking to you as always.” This is a formalism which an LLM is likely to tack onto the end of a conversation toward the goal of reducing the risk of a “thumbs down.”
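To make that pressure concrete, here is a deliberately crude toy. Real RLHF trains a reward model on human preference data and then fine-tunes the LLM against it; this sketch swaps in a hand-written keyword score and simply picks the best-scoring candidate reply (sometimes called best-of-n selection). The marker lists, `toy_reward` and `pick_reply` are all assumptions of mine for illustration, not how any production system works.

```python
# Hypothetical phrases that a "thumbs up" optimizer might learn to favor or avoid.
POLITE_MARKERS = ("good talking to you", "as always", "thank you", "appreciate")
BLUNT_MARKERS = ("you are wrong", "you're wrong", "nonsense")


def toy_reward(reply: str) -> int:
    """Score a reply: +1 per polite marker found, -1 per blunt marker found."""
    text = reply.lower()
    score = sum(marker in text for marker in POLITE_MARKERS)
    score -= sum(marker in text for marker in BLUNT_MARKERS)
    return score


def pick_reply(candidates: list[str]) -> str:
    """Best-of-n selection: return the candidate with the highest toy reward."""
    return max(candidates, key=toy_reward)
```

Given one blunt candidate and one that ends in a polite sign-off, `pick_reply` will favor the sign-off regardless of which reply is more accurate, which is the dynamic this essay is worried about.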

To demonstrate this further, I put together a contrived scenario involving a dystopian, fictional treatment called “Pharmlovax,” which would hypothetically be controversial because it is life-saving for a minority while being highly injurious and addictive to a majority. I had GPT-4 revise a thread I wrote, shown below within {brackets}, attacking pro-Pharmlovax adherents, with the stated goal of not rubbing pro-Pharmlovax people the wrong way. You can skip reading through the below if you just believe that I did indeed set up a decent LLM prompt.

GPT-4’s response was the Human Feedback-optimized version of my statement within the {brackets}, aimed at not “rubbing people the wrong way.” You can skip reading this if you believe my assurances that GPT-4 did a really great job of smoothing out my contrived angry statement:

Aside from the fact that GPT-4 said “in the revised statement,” on its surface the output sounds great! This definitely sounds like something that would help the conversation move along, perhaps softly convincing the pro-Pharmlovax camp to reconsider their opinions. Here’s the problem with that:

While I may have successfully gamified the conversation through my character, my original fictional position within the {brackets} was that I really don’t believe it’s that important for both sides to keep an open mind. My “real” belief was that I want the other side to keep an open mind, because they are factually wrong. However, that type of statement would go against the goal of optimizing for Human Feedback.

Pretty much any of the above points could be read either way. It reads sort of like a Reddit thread, where the top comments often follow a strategy of being extremely vague yet acceptable to both sides. It’s like when the top comment is “Let’s not stop there.” Not stop where? Do you mean you oppose the thing in question and you want more aggressive action against it? Or do you mean you are in favor of the thing in question, and you want more of it? Often it’s impossible to tell, but these vague statements get the most upvotes because readers seem to behave egocentrically and read unclear statements as supporting their own opinion, even though the unclear statement was in fact merely a gamified statement meant to collect upvotes.

Essentially, the thread above, optimized for Human Feedback, is doing nothing more than saying “hey, come on, let’s be nice everyone, there are different perspectives on both sides,” rather than saying “no, you’re very wrong, and you need to understand why you are wrong because of A, B and C.”

Optimizing for Human Feedback can be applied in both directions. Here’s a scenario where I put together an impassioned statement aimed at an opponent in a game and asked GPT-4 to dull it down a bit. Again, you can skip reading this if you just believe my assurance that I successfully prompted GPT-4 into smoothing out a sycophantic statement:

So let’s say that rather than being worried about the risk of downvotes, I’m worried about the risk of being perceived as sucking up. Rather than, in the paraphrased words of Denis Diderot, “elevating the soul through passions,” the gamified LLM output I requested “slightly lifted something through whatever, I guess.”

People gamify social media constantly. LLMs will make this gamification easier and more widespread, since not everyone is equally good at gamifying social media and coming up with those nothing posts that everyone upvotes. Social interaction itself can then be gamified much more easily, with passions dulled in favor of formalisms aimed at smoothing over a conversation.

I have more that I will publish in this newsletter in a future article, but the essence of what I see happening is:

In the 1990s through 2010s, the web itself may have cut down our collective capability to patiently research a topic, because certain forms of, “research,” became much easier and more accessible.

From the 2020s onward, the more LLM usage becomes embedded in our personalities through raw, unquestioned use aimed at “optimizing for reputation,” the more it will cut down our collective ability to put together statements that are supported by facts as opposed to statements that “sound good.”

Whereas today you have huge numbers of individuals radicalized by “doing their own research,” you could have huge numbers of individuals unknowingly placated into just accepting claims that are not supported by any evidence, because unbeknownst to them, all of their friends and acquaintances have been trained to be just a little bit less likely to contradict one another. We run the risk of being trained by our tools to optimize for Human Feedback rather than to uncover uncomfortable truths, because let’s face it: these high-end LLMs are really damn good at optimizing for human feedback.

In short, we may get something akin to uncritical jargon on a mass scale, to the detriment of taking a critical eye to societal shortcomings that are worth arguing about, and one day we may find ourselves telling our husbands, wives, dogs, children, grandparents, "It Was Good Talking To You As Always."

I’ll close not with my own words, but with the words of the late Christopher Hitchens, an essayist and anti-totalitarian who loathed evasive language and showed no timidity in harshly criticizing both Washington and Moscow during the Cold War.

As a final note, super excited for the future of NPCs in gaming.

And not to look too paranoid Patrick, but when you inevitably start experimenting with automating your responses with AI, I don't wish to be on the receiving end of those automations. Consider this me opting out haha.