Viva la Revolución Of...Open Source Large Language Models?

I'll Keep This Short

To a casual observer, it may seem that the micro-singularity of our age arrived with the release of ChatGPT at the end of November 2022, and that we have simply been living in that age ever since. A closer look at what happened on the sidelines in early 2023 reveals a potentially even more transformative question about the already revolutionary times we live in. That is:

Will we be living in a world where we continue to use state-of-the-art, centralized, jovian Large Language Model (LLM) applications such as ChatGPT, Bard, and Bing as they increasingly take over our lives and transform our world?

Or will open-source enthusiasts, akin to the skilled tool and die makers of the industrial revolution, disintermediate these centralized powers with their own LLMs?

I posted an article in early April of 2023 about the staggering pace at which open-source Large Language Models (LLMs) have been emerging this year. In it I speculated that OpenAI’s newly emerged strategy, leveraging plugins and attempting to become a sort of destination site to compete with Google, might be rendered moot by those open-source LLMs.

Admittedly this was really a hot take at the time I wrote it, but as of May 5th, 2023 more evidence has emerged that what I had written may be a real, justified fear on the part of OpenAI and Google. Let’s look at a timeline of events:

March 2023 - The Leaking of LLaMA

In March, the weights of Meta's LLaMA model, which had been released only to approved researchers, were leaked to the public, sparking a surge of innovation within the open-source community. Although the leaked model lacked instruction and conversation tuning, the community rapidly developed variants with these features, lowering the barrier to entry for training and experimentation to the point where individuals can tinker on modest hardware. Much of the open-source community's progress in both image generation and language models has been driven by low-rank adaptation (LoRA), a fine-tuning technique that trains a small pair of low-rank matrices instead of updating all of a model's weights, which makes model updates far cheaper.
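To make the LoRA idea a bit more concrete, here is a rough sketch in plain Python. It is not taken from the paper's code or from any library. The function names, the 4096 x 4096 layer size, and the rank of 8 are all illustrative assumptions, chosen only to show why training two thin matrices is so much cheaper than retraining a full weight matrix.

```python
# Minimal LoRA sketch (illustrative only; all names and sizes are assumptions).
# Idea: keep the pretrained weight W (d_out x d_in) frozen and learn a low-rank
# update B @ A, where B is d_out x r and A is r x d_in, with r much smaller
# than d_out and d_in.

def full_finetune_params(d_out, d_in):
    """Trainable parameters when every entry of W is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters when only A (rank x d_in) and B (d_out x rank) are updated."""
    return rank * (d_out + d_in)


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, scale=1.0):
    """Compute W x + scale * B (A x) without ever materializing B @ A."""
    base = matvec(W, x)                # frozen pretrained path
    update = matvec(B, matvec(A, x))   # low-rank trainable path
    return [b + scale * u for b, u in zip(base, update)]


if __name__ == "__main__":
    d, r = 4096, 8  # hypothetical layer width and LoRA rank
    full = full_finetune_params(d, d)
    lora = lora_params(d, d, r)
    print(f"full fine-tune: {full:,} trainable parameters")
    print(f"LoRA (rank {r}): {lora:,} trainable parameters")
    print(f"reduction: {full // lora}x")
```

For this hypothetical layer the low-rank update needs 65,536 trainable parameters instead of 16,777,216, a 256-fold reduction. That kind of saving is what puts fine-tuning within reach of hobbyist hardware.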

A hand-wavy way to describe what they have done in the paper linked above is that they essentially heavily compressed a llama, as shown:

Open-source models, trained on small, curated datasets, are now achieving quality comparable to ChatGPT. With models becoming more accessible and affordable, the dominance of major players like Google and OpenAI in the space is being challenged. Open-source models offer free alternatives without usage restrictions, making it difficult for companies to compete.

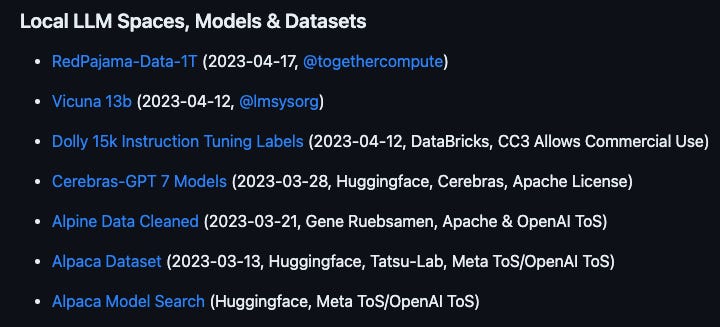

Here’s a list of some open-source LLMs from the link above:

And here’s a look at OpenAssistant, a chatbot which uses a 30-billion parameter version of LLaMA to create responses, compared to ChatGPT using GPT-4, with both given the same question.

So clearly there are some differences in capability. GPT-4, being the state-of-the-art technology for the time being, understands what I’m getting at and provides a fantastic and creative response. What is certainly not to be ignored, though, is that LLaMA with OpenAssistant provides what would have been described as revolutionary a mere 6 months ago, and it cost virtually nothing to develop.


Deep Dive on OpenAI’s Finances

Meanwhile, on May 5th, 2023, The Information reported that OpenAI had lost roughly $540 million in 2022, mostly while developing ChatGPT, against just $28 million in revenue. The gap was attributed primarily to the high costs of training language models and recruiting technical talent.

The AI arms race is expected to drive expenses higher in 2023. Despite ChatGPT's success, OpenAI plans to invest more in cloud computing for language model training, while data costs will rise as platforms like Reddit and Stack Overflow begin charging for access.

So what we have here is a squeeze. On the one hand, it is exorbitantly expensive to continue to develop and maintain the state-of-the-art technology. On the other hand, underlying technology that’s around 6 months behind is essentially free and open source. Perhaps more importantly, if you’re a company or organization using it, you don’t have to share any of your valuable fine-tuning data with Google, Microsoft, or OpenAI.

The global generative AI market is predicted to reach $42.6 billion in 2023, but skeptics like venture capitalist Sam Lessin argue that larger companies will capture most of the AI market share, as AI can easily be integrated into existing businesses and distribution patterns. I would thus pose the question: why would sizeable companies need a state-of-the-art LLM served up by a tech giant through an API or plugin when they can just roll their own in-house, or work with smaller players over whom they may hold more leverage?

Cue Google’s leaked internal panic document.

Google’s Internal Panic Document

On Thursday, May 4th, 2023, an internal document written by a Google employee was leaked via a Discord server. It essentially stated that Google has no moat, and neither does OpenAI, against these open-source LLMs. The authors of the exposé on the linked document state that they disagree with the leaker’s opinion, but that opinion was essentially as follows:

The author emphasizes that neither Google nor OpenAI is well-positioned to win the AI arms race due to the rapid advancements made by the open-source community. Open-source projects have tackled several significant challenges, such as running foundation models on smartphones, enabling scalable personal AI, and training multimodal models quickly.

While proprietary models still have a slight quality advantage, open-source models are closing the gap, offering faster, more customizable, private, and cost-effective solutions.

“We have no moat, and neither does OpenAI”

-Leaked Quote from Google Employee

The above realizations lead to several implications:

Companies should learn from and collaborate with external parties, prioritizing third-party integrations.

People won't pay for restricted models if free, unrestricted alternatives are comparable in quality; companies need to reassess their value propositions.

Giant models hinder progress; smaller, quickly iterated models should be prioritized, especially considering the capabilities of the sub-20 billion parameter range.

Looking Forward - Open Source vs. Centralized Services

I’m an advocate for bottling up a proposition into a measurable map that can be followed, then setting a threshold on which we can take bets about whether a particular transition occurred. It is a way to think more deeply about the subject and, ideally, to monitor whether something actually “happened” rather than just discussing it in general. I will admit that it’s so early in the game that it’s hard to define what it would mean for open source to win or lose. Even so, I set up a market on Manifold.Markets and invite anyone who would like to participate to discuss how we could set verifiable, objective, third-party metrics to follow this trend. So to close out, here’s the market: